Google has quietly released an experimental Android app that lets users download and run AI models directly on their phones, entirely offline. The app, called Google AI Edge Gallery, could reshape how artificial intelligence is deployed, used and trusted by everyday people.

While tech giants often herald their AI products with splashy announcements and polished marketing, Google took a different approach this time. The AI Edge Gallery was released without fanfare, tucked away in a GitHub repository and labeled “experimental alpha.” But don’t let the soft launch fool you: this app hints at a future where powerful AI runs in your pocket, not in the cloud.

What Is Google AI Edge Gallery?

Google AI Edge Gallery is an Android app that lets users explore, download and run machine learning models locally. Once you install a model, you don’t need an internet connection to use it. Whether you’re chatting with an LLM (large language model), analyzing an image or testing a text-generation prompt, everything happens directly on your device.

The app is designed to support low-latency, privacy-focused and offline AI interactions. It’s the kind of tool that developers, privacy advocates and AI enthusiasts have been hoping for, especially as concerns grow over data privacy, surveillance and cloud dependence.

Currently, the app supports:

- Text-based chat with local LLMs (e.g., Google’s Gemma 2B models)

- Image-based queries (upload a photo and ask questions about its contents)

- Prompt lab features for summarizing, rephrasing and generating content using downloaded models

All of these are powered by models sourced from Hugging Face, downloaded on demand and optimized for local execution.

Why This Matters

Google’s new app isn’t just another AI tool: it’s a quiet but powerful shift toward personal, private and offline intelligence. By bringing models directly to your device, it rewrites the rules for how we interact with AI.

1. Privacy: Your Data Stays on Your Device

Unlike most AI apps that send your queries to cloud servers, AI Edge Gallery keeps everything local. That means:

- Your prompts and inputs are not sent to Google or any third-party provider for processing.

- Your photos, text and interactions remain on your device.

- You can run sensitive or confidential tasks, such as summarizing legal documents or drafting private messages, without exposing them to the cloud.

2. Offline Access: AI Without the Internet

Imagine being in a remote area, on a flight or in a secure location with no internet and still having access to powerful AI tools. That’s exactly what this app enables. Once a model is downloaded, you don’t need a connection to:

- Generate text

- Chat with a local assistant

- Analyze documents or images

This unlocks AI for millions of users in regions with poor connectivity or for industries where cloud access is restricted (e.g., military, health and field research).

3. Developer Playground

Developers can treat the app as a testbed for on-device AI experimentation. Google has included benchmarking tools that show how well your device runs a given model, measuring speed, memory use and latency.

Google has released the project as open source under the Apache 2.0 license, so anyone can fork the code, build their own tools and help advance the field. It is a significant step toward community-driven, transparent artificial intelligence.

A Closer Look at the Features

Google AI Edge Gallery isn’t just about running models offline: it’s packed with hands-on tools that let you explore, experiment and interact with AI in ways previously limited to the cloud.

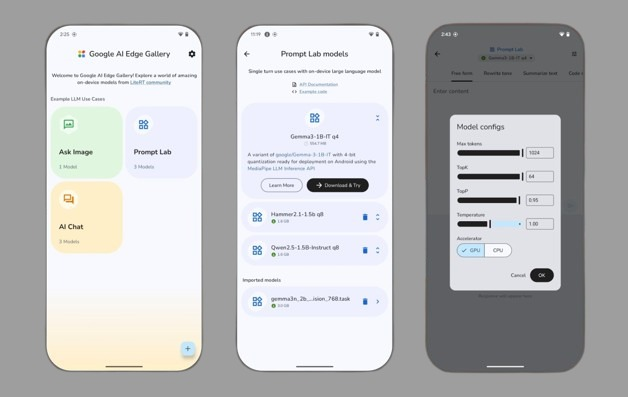

Model Browser

The app connects to a curated list of models hosted on Hugging Face. Currently, it supports several quantized versions of open-source large language models (LLMs), such as:

- Gemma 2B

- Phi-2

- TinyLlama

Users can browse the list, read model descriptions and download the ones they want. Downloaded models run through Google’s LLM Inference API on LiteRT, Google’s lightweight inference runtime (formerly TensorFlow Lite) optimized for on-device execution.
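Quantization is what lets models like these fit on a phone: storing each weight in fewer bits shrinks memory proportionally. As rough arithmetic, a 2-billion-parameter model needs about 8 GB for 32-bit floating-point weights but about 2 GB at 8 bits per weight. The Python sketch below shows a generic symmetric int8 scheme; the article doesn’t say which quantization format the listed models use, so treat it as an illustration of the idea only. The function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values and the shared scale."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.0, 1.27, 0.333]
q, scale = quantize_int8(weights)
print(q, scale)
print(dequantize(q, scale))
```

Each weight now takes one byte instead of four, at the cost of a rounding error of at most half the scale per weight.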

Prompt Lab

This is your AI playground. You can feed the model text and perform tasks like:

- Summarization

- Translation

- Rewriting

- Custom prompt engineering

You also get control over key generation parameters, including temperature, max tokens and beam search settings. It’s a great way for prompt engineers and hobbyists to experiment with local model behavior.
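To make the temperature and max-token settings more concrete, here is a minimal Python sketch of temperature sampling. It is a generic illustration, not the app’s code: `next_logits` is a hypothetical stand-in for a model that scores every vocabulary entry, and beam search is not shown.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(next_logits, temperature=0.8, max_tokens=16, eos_id=0, seed=None):
    """Sample token ids until the end-of-sequence id appears or max_tokens ids are produced.

    next_logits is hypothetical: it maps the tokens generated so far to one score per vocabulary entry.
    """
    rng = random.Random(seed)
    tokens = []
    for _ in range(max_tokens):
        probs = softmax_with_temperature(next_logits(tokens), temperature)
        token = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        tokens.append(token)
        if token == eos_id:
            break
    return tokens


# Toy "model" that always prefers token 2, then 1, then 0 (the end-of-sequence id).
print(generate(lambda toks: [0.0, 1.0, 2.0], temperature=0.5, max_tokens=8, seed=42))
```

Lower temperatures concentrate probability on the highest-scoring token, making output more predictable; higher temperatures flatten the distribution and add variety. `max_tokens` simply caps how long the output can get.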

AI Chat

This feature offers an experience similar to ChatGPT or Gemini, but it runs entirely offline. To start a conversation, download a suitable chat model. It supports:

- Multi-turn chat

- Local memory (within session)

- Fast response generation, depending on your hardware

Ask Image

Take a photo or choose one from your gallery, then ask the model questions such as:

- “What is this object?”

- “What text is on this sign?”

- “Summarize this slide from the presentation.”

It’s not perfect, especially on lower-end devices, but it offers a glimpse of what multimodal local AI could become.

Performance: It Depends on Your Phone

AI Edge Gallery works best on modern Android phones. Devices with Tensor Processing Units (TPUs) or Neural Processing Units (NPUs) run models more efficiently, but phones without a dedicated AI processor can fall back to the CPU or GPU.

Lower-end devices may struggle to run large models or respond slowly. Battery drain also becomes a concern with sustained use, particularly for vision-language tasks.

That said, the app includes performance dashboards to help users monitor and understand how well their device handles different models.
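Dashboards like these typically report metrics such as time to first token and decode speed in tokens per second. The article doesn’t specify exactly what the app measures, so this Python sketch is an assumption-based illustration: `stream` is a hypothetical function that yields generated tokens one at a time, and the clock is injectable so the measurement can be checked deterministically.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class GenerationStats:
    time_to_first_token_s: float
    total_time_s: float
    tokens: int

    @property
    def decode_tokens_per_s(self) -> float:
        """Throughput after the first token, often quoted as decode speed."""
        decode_time = self.total_time_s - self.time_to_first_token_s
        if self.tokens < 2 or decode_time <= 0:
            return 0.0
        return (self.tokens - 1) / decode_time


def measure(stream: Callable[[], Iterable[str]],
            clock: Callable[[], float] = time.perf_counter) -> GenerationStats:
    """Consume a token stream, recording when it starts, when the first token arrives and when it ends."""
    start = clock()
    first = None
    count = 0
    for _ in stream():
        if first is None:
            first = clock()  # latency until the first token appears
        count += 1
    end = clock()
    ttft = (first - start) if first is not None else end - start
    return GenerationStats(ttft, end - start, count)
```

Separating time to first token from decode speed is useful because the first mostly reflects prompt processing, while the second reflects how quickly the device produces each additional token.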

How to Install It

Right now, Google isn’t distributing the app through the Play Store. To install:

- Visit the GitHub repository: https://github.com/google-ai-edge/gallery

- Download the latest .apk from the Releases section

- Enable “Install unknown apps” for the app you’ll use to open the APK (such as your browser or file manager)

- Sideload the APK and start using the app

The current release is version 1.0.3 (as of late May 2025), and Google is regularly pushing updates and bug fixes.

⚠️ Note: Since it’s still in alpha, the app might be unstable or buggy on some devices.

What’s Coming Next?

Google has hinted at a roadmap that includes:

- iOS support (a version for iPhones and iPads is in development)

- On-device Retrieval-Augmented Generation (RAG) so your offline model can search PDFs or notes stored on your phone

- Function calling, where local models can trigger apps, create calendar events or draft emails using on-device data

- Multimodal AI that accepts sensor, video and audio inputs
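To illustrate the retrieval step behind on-device RAG, here is a toy Python sketch that ranks locally stored notes by word overlap with a question and packs the best matches into a prompt. It is purely an assumption for illustration: Google hasn’t described how its RAG feature will work, real systems usually rank passages by embedding similarity rather than raw word counts, and `retrieve` and `build_prompt` are hypothetical names.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, notes: dict[str, str], k: int = 2) -> list[str]:
    """Return titles of up to k notes sharing the most words with the query (toy lexical scoring)."""
    q = Counter(tokenize(query))
    scored = []
    for title, body in notes.items():
        words = Counter(tokenize(body))
        score = sum(min(q[w], words[w]) for w in q)
        if score > 0:
            scored.append((score, title))
    # Highest score first; ties broken alphabetically for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored[:k]]


def build_prompt(query: str, notes: dict[str, str], k: int = 2) -> str:
    """Place the retrieved notes ahead of the question so a local model can ground its answer."""
    context = "\n\n".join(f"[{t}]\n{notes[t]}" for t in retrieve(query, notes, k))
    return f"Answer using only the notes below.\n\n{context}\n\nQuestion: {query}"


notes = {
    "flight": "Flight to Lisbon departs at 9am from gate 12.",
    "groceries": "Buy milk, eggs and bread.",
    "hotel": "Hotel check-in in Lisbon after 3pm.",
}
print(build_prompt("When does my Lisbon flight depart?", notes, k=1))
```

The resulting prompt would then be handed to the local model, so both the notes and the question stay on the device.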

This future vision aligns with Google’s broader strategy of AI at the edge, allowing smarter, more responsive experiences that aren’t dependent on central servers.

Final Words

Google’s AI Edge Gallery might have launched quietly, but its implications are anything but small. At a time when users are increasingly wary of data privacy and network dependence, this app offers a refreshing alternative: powerful AI that runs locally, privately and offline.

Whether you’re a developer, a privacy advocate, a researcher in the field or just someone who wants more control over your digital tools, this app is worth checking out. And as more models become optimized for on-device use and more capabilities are added, the age of cloud-independent AI could arrive faster than we think.

Have you tried AI Edge Gallery? Leave a comment with your thoughts or experiences!